It seems like every time you turn around, there's a new AI technology. Last month there were several, but the one I want to highlight today is Gemini 2.5 by Google. It was released the same day as the image generation feature in OpenAI's GPT‑4o, which of course meant that Gemini's arrival was overshadowed by the internet's newfound love of recreating everything in the Studio Ghibli art style.

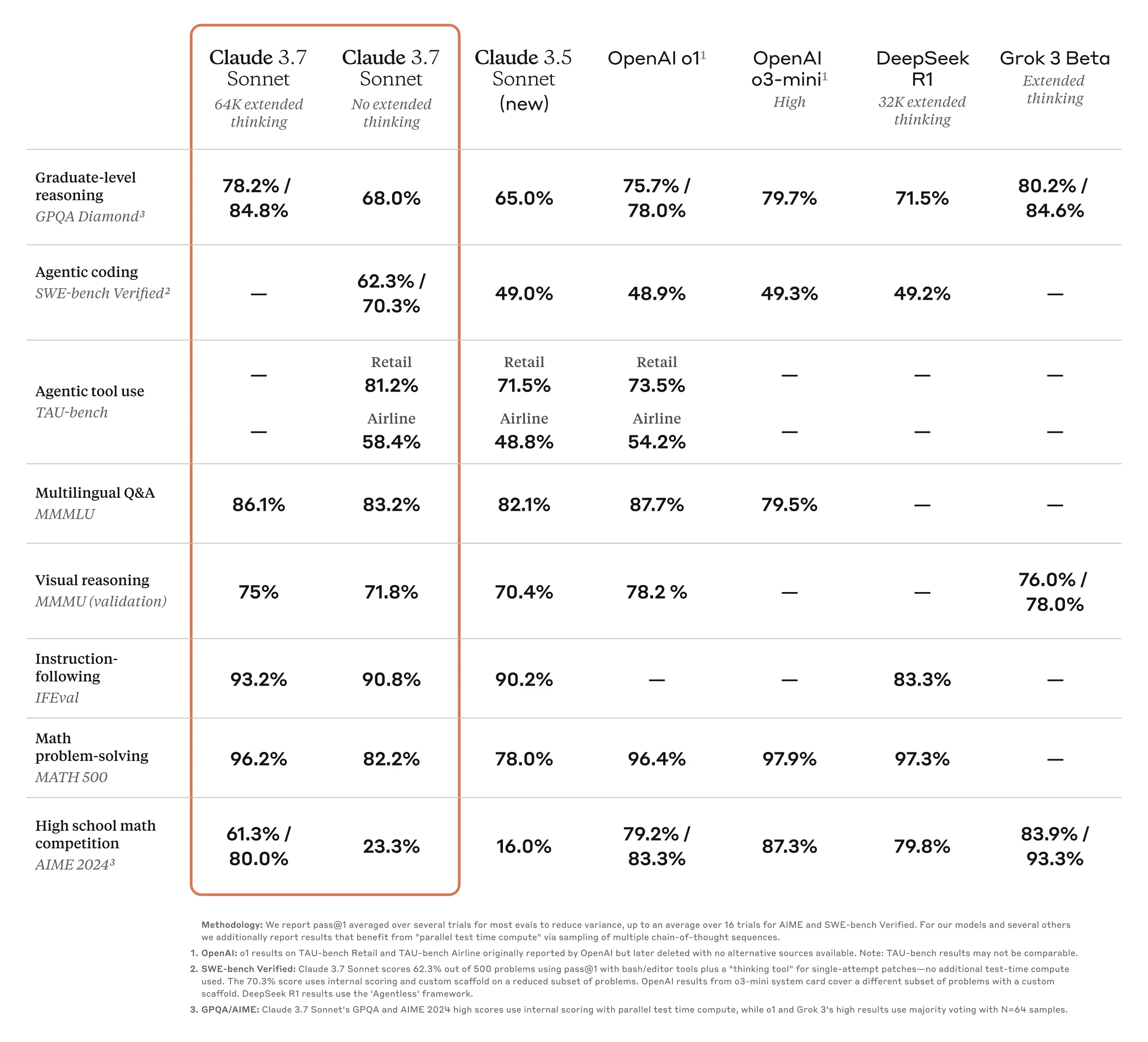

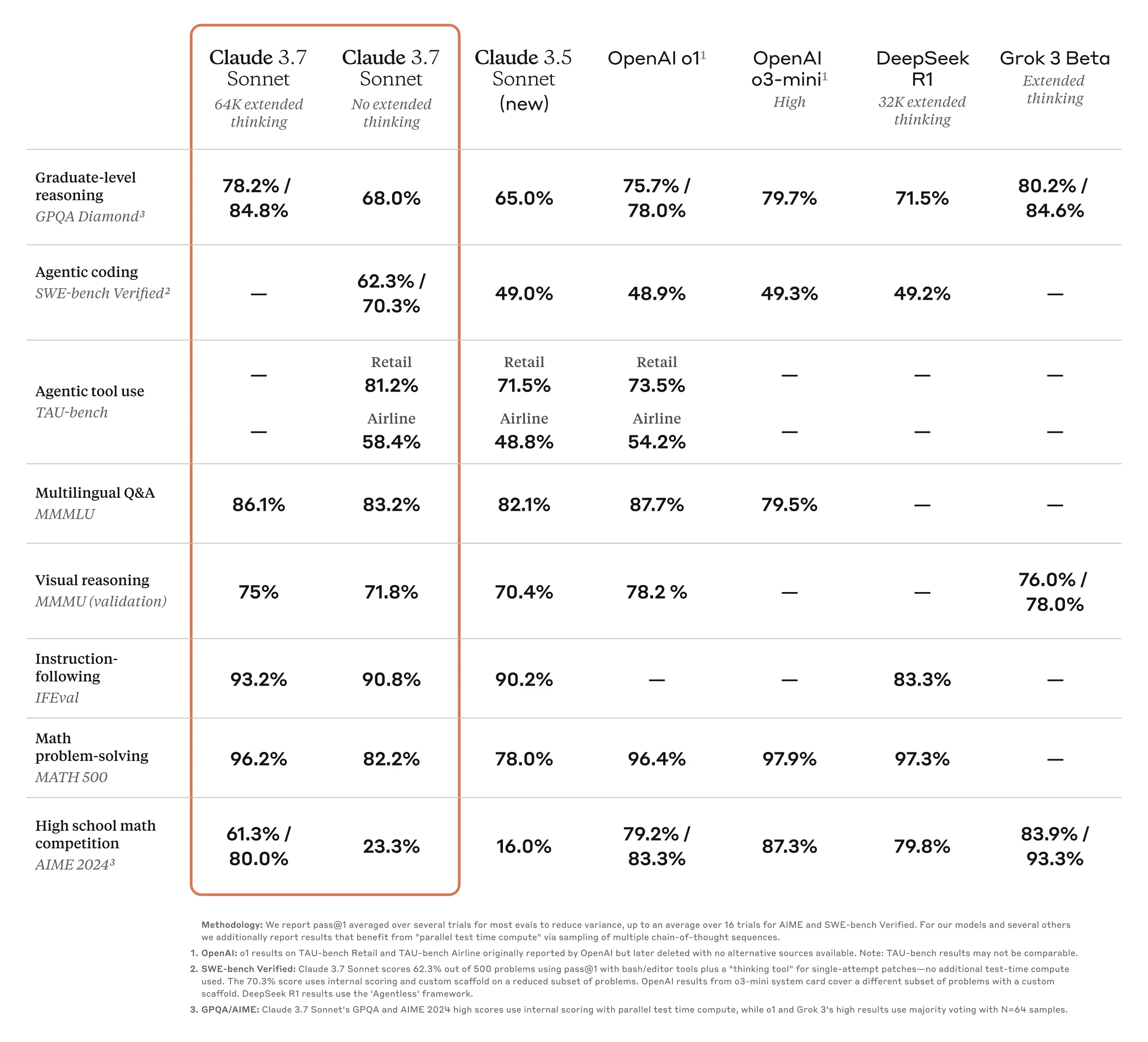

Results may vary.

Even so, I heard Gemini was quite good, so I thought I'd give it a whirl. My benchmark for testing the coding capabilities of any AI is to see how well it can build a dress-up game, assuming assets are provided. Attempts with the first publicly available ChatGPT and other early Large Language Models (LLMs) didn't go so well. The request sounds simple at first, but once you start working out the steps required to make such a game, the hidden complexities reveal themselves. The early LLMs would certainly try, but the code they produced didn't function well enough to meet the request.

Gemini 2.5, however, had no problems talking me through the steps of my request. I even let it propose which language and frameworks to use based on suitability. Gemini is a 'thinking' model, which roughly translated means it can review its reasoning and alter its approach accordingly. I like that you can see its thought process and why it outputs what it does. As the instructions I gave were fairly vague, it asked clarifying questions to determine the most appropriate design and then got started.

The code it generated did still have some errors. That said, when given the error message it was able to both explain the issue and provide a decent solution. The fixes worked most of the time, and after a fairly short while we had a working dress-up game proof of concept. So while it wasn't a flawless process, the game was set up much faster than in my previous attempts to build it manually.

Now, despite working in tech for so long, I had never done any Python scripting before, so for my second experiment I thought it would be a good test to see whether Gemini could still deliver when the user was less familiar with the subject. I asked it to create a fairly complex record data migration script.
It handled most of the requests for this well too. However, it did sometimes 'forget' earlier requirements: it would adjust the code for the latest request, but occasionally in a way that broke a previously requested feature. Overall, it handled the data migration script so well that I gave it an extra challenge: migrating poorly structured data from a PDF. Amazingly, it handled that task excellently, and I was rather impressed.

It would be remiss of me not to include a caveat here: while the technology is improving rapidly, it is not yet at a point where you can forgo understanding the produced code and leave everything up to AI. For personal projects like the ones I described above, AI is hugely beneficial in the initial phases of design and implementation, but its limited context makes it less effective during feature refinement, general maintenance and debugging, which together make up a huge part of development. For me, this was evident during the development process, as I found that it kept forgetting session history. When I returned the following day, I had to get Gemini back up to speed with the current status before we could continue.

Gemini is not actually the LLM most praised for its coding abilities; at the time of writing, that appears to be Claude 3.7. When compared with other AI models in benchmark tests, Claude 3.7 excelled across multiple areas.

Source: Anthropic

Despite this, the fact that an AI that's considered 'average' for coding output could still produce what I requested to such a high quality is brilliant. If you are starting a personal project and want to use AI for brainstorming and initial development, it's well worth giving Gemini a go.